PyTorch Check If GPU Is Available? Complete Guide!

In today’s world of machine learning and deep learning, having access to a GPU (Graphics Processing Unit) can significantly accelerate your model training. PyTorch, a popular deep learning framework, makes it easy to check whether one is available.

You can check GPU availability in PyTorch with “torch.cuda.is_available()”, returning “True” if a GPU is accessible, enabling faster deep learning computations when available.

In this article, I will guide you through checking GPU availability in PyTorch, step by step.

How do I check if PyTorch is using the GPU?

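To check whether PyTorch can use the GPU, call “torch.cuda.is_available()”. If it returns “True”, PyTorch can use the GPU; otherwise, it can only use the CPU. Note that this reports availability only: a tensor or model runs on the GPU only after it has been moved there, which you can confirm through a tensor’s “device” attribute.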
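
As a minimal sketch of the difference between "a GPU is available" and "this tensor is on the GPU", the snippet below places a tensor and a small model on whichever device is present and prints where they ended up. The names device, x and model are illustrative only.

```python
import torch

# Fall back to the CPU so the sketch also runs on machines without a GPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.ones(2, 2, device=device)
model = torch.nn.Linear(2, 1).to(device)

# A tensor reports where it lives; a model is checked through its parameters.
print("tensor on:", x.device, "| is_cuda:", x.is_cuda)
print("model on:", next(model.parameters()).device)
```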

PyTorch Check GPU?

To check GPU availability in PyTorch, use the command ‘torch.cuda.is_available()’. It returns a Boolean value indicating whether a GPU is accessible for computation.

Requirements:

1. PyTorch Installed:

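PyTorch is a popular machine learning library for Python, used for deep learning tasks such as neural networks and tensor computations. It must be installed in your Python environment, in a build with CUDA support if you want to use an NVIDIA GPU.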

2. CUDA Drivers:

CUDA drivers are software components developed by NVIDIA to enable GPU-based computing. They are required to run GPU-accelerated applications, including deep learning frameworks like PyTorch, on NVIDIA hardware.
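
Both requirements can be checked from Python. The sketch below is one possible way to do it; the helper name pytorch_gpu_requirements and the keys of the returned dictionary are made up for illustration, while the torch attributes it reads are standard.

```python
import importlib.util


def pytorch_gpu_requirements() -> dict:
    """Collect basic facts about the PyTorch install (hypothetical helper)."""
    report = {
        "torch_installed": False,
        "torch_version": None,
        "built_with_cuda": None,
        "gpu_usable": False,
    }
    # find_spec returns None when the package cannot be found.
    if importlib.util.find_spec("torch") is None:
        return report

    import torch

    report["torch_installed"] = True
    report["torch_version"] = torch.__version__
    # torch.version.cuda is None for builds without CUDA support (e.g. CPU-only).
    report["built_with_cuda"] = torch.version.cuda
    # True only when the build, the driver and the hardware all line up.
    report["gpu_usable"] = torch.cuda.is_available()
    return report


report = pytorch_gpu_requirements()
print(report)
```

If built_with_cuda shows a version but gpu_usable is False, the driver or the hardware is the more likely culprit than the PyTorch package itself.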

Checking GPU Availability in PyTorch?

You can check GPU availability in PyTorch using the command “torch.cuda.is_available()”. It returns “True” if a GPU is available, otherwise “False”.

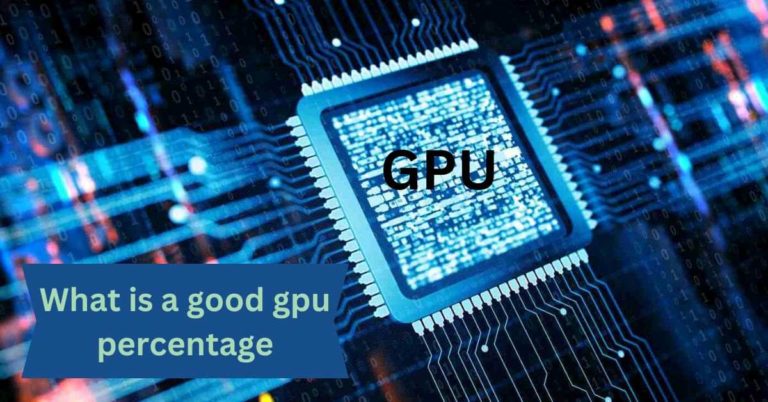

1. Import PyTorch:

To import PyTorch, use the following code in Python:
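
Assuming PyTorch is already installed in the active Python environment, the import is a single line. Note that the package is imported under the name torch, not pytorch.

```python
import torch  # the PyTorch package is imported under the name "torch"
```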

This line of code allows you to access PyTorch’s functionality in your program.

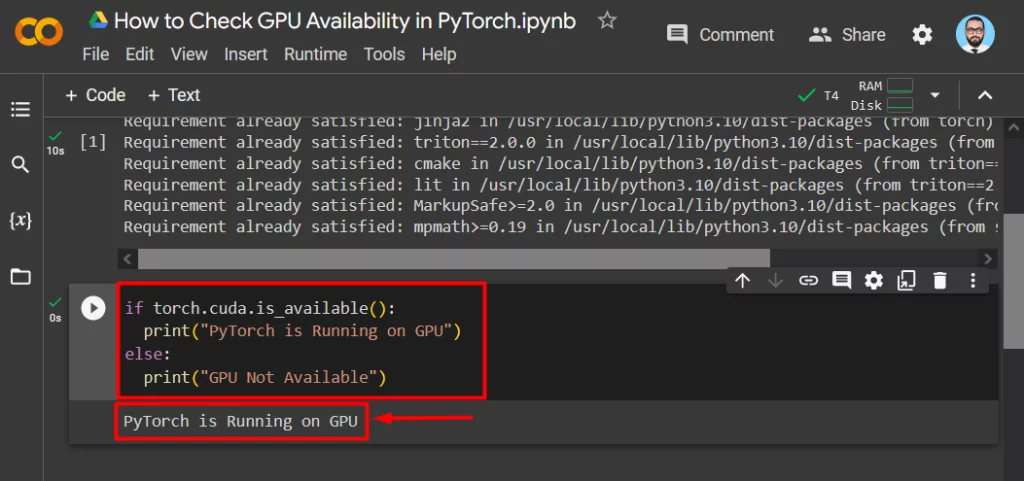

2. Check for GPU:

To check for the availability of a GPU in Python using PyTorch, you can use the following code:
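
A minimal version of this check, relying only on torch.cuda.is_available(), might look like this (the variable name gpu_available is just for illustration):

```python
import torch

# True when PyTorch can reach at least one usable GPU, False otherwise.
gpu_available = torch.cuda.is_available()

print("GPU available:", gpu_available)
```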

This code will print whether a GPU is available for use in your system.

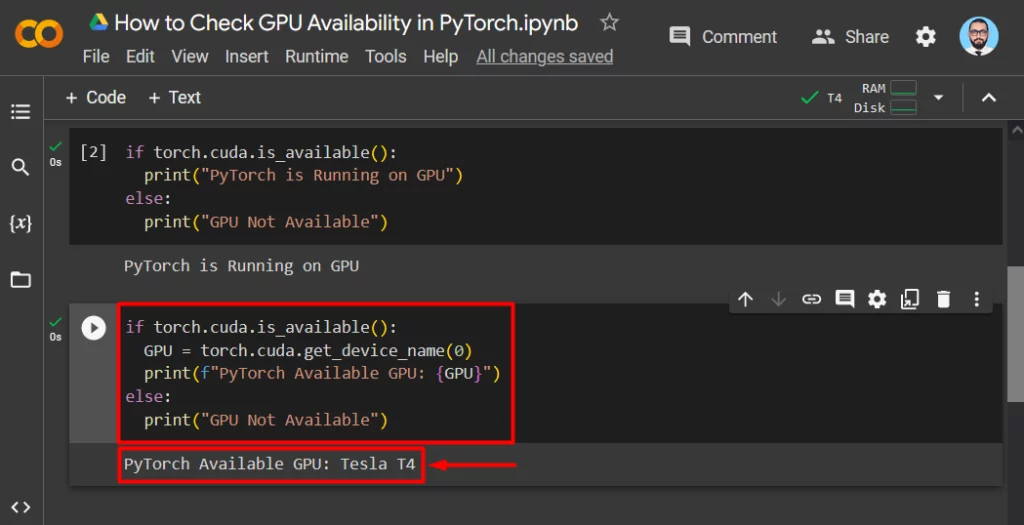

3. GPU Information:

To obtain GPU information in Python using PyTorch, you can use the following code:
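
One way to sketch this is with torch.cuda.get_device_properties(). The helper name gpu_info and the dictionary keys are illustrative, and the memory figure is converted to GiB for readability.

```python
import torch


def gpu_info(index: int = 0):
    """Return basic properties of one GPU, or None if no GPU is available."""
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(index)
    return {
        "name": props.name,
        "total_memory_gib": round(props.total_memory / 1024**3, 2),
        # Compute capability is reported as separate major/minor numbers.
        "compute_capability": f"{props.major}.{props.minor}",
    }


info = gpu_info()
print(info if info is not None else "No GPU is available.")
```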

This code retrieves and prints information about the available GPU, including its name, total memory, and compute capability if a GPU is available; otherwise, it indicates that no GPU is available.

PyTorch Check If CUDA Is Available?

To determine if CUDA is available in PyTorch, use the command ‘torch.cuda.is_available()’. This function returns a Boolean value indicating whether CUDA (GPU support) is accessible for computation.

How do I know if my GPU is available in PyTorch?

You can check if your GPU is available in PyTorch by using the command torch.cuda.is_available(). It returns True if a GPU is accessible, otherwise False.

Find out if a GPU is available?

To determine GPU availability in PyTorch, use torch.cuda.is_available(). It returns True if a GPU is accessible, or False if not.

Find out the specifications of the GPUs?

You can retrieve GPU specifications in PyTorch using torch.cuda.get_device_properties(device_id), where device_id specifies the GPU. It provides details like name, memory, and compute capabilities.

Why is PyTorch not detecting my GPU?

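PyTorch may not detect your GPU due to missing or outdated GPU drivers, a CPU-only PyTorch build, a PyTorch build whose CUDA version does not match the installed driver, or incompatible hardware. Ensure the drivers and a CUDA-enabled PyTorch build are properly installed for GPU support.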

Do I need to install CUDA for PyTorch?

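No, CUDA is not required to run PyTorch: without it, PyTorch simply runs on the CPU, which is slower for large models. To use an NVIDIA GPU you need a CUDA-enabled PyTorch build and a suitable NVIDIA driver. The official pip and conda packages bundle the CUDA runtime libraries, so a separate CUDA Toolkit installation is usually not needed.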

How do I list all currently available GPUs with PyTorch?

To list all currently available GPUs in PyTorch, use torch.cuda.device_count() to get the total count and torch.cuda.get_device_name(i) for each GPU’s name.
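
Put together, those two calls can be sketched as a short loop. The helper name list_gpus is illustrative; on a machine without a GPU the list is simply empty.

```python
import torch


def list_gpus() -> list:
    """Return the names of all GPUs visible to PyTorch, in index order."""
    # device_count() is 0 when no GPU is usable, so the loop body never runs.
    return [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]


gpus = list_gpus()
for index, name in enumerate(gpus):
    print(f"cuda:{index} -> {name}")
if not gpus:
    print("No GPUs found.")
```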

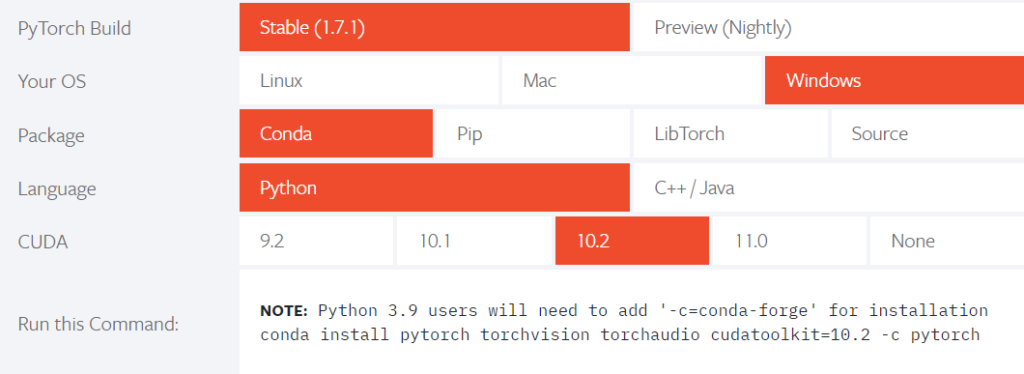

How to make code run on GPU on Windows 10?

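To run code on the GPU in Windows 10 with PyTorch, ensure you have a compatible GPU and up-to-date NVIDIA drivers, then move tensors and models to the GPU with “.to('cuda')” or “.cuda()”. Note that “torch.cuda” is a module, not a function, so it cannot be called directly.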
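
As a rough sketch, the same code works on Windows, Linux, or a CPU-only machine if the device is chosen once and reused. The variable names here are illustrative.

```python
import torch

# Pick the GPU when present, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

a = torch.rand(3, 3).to(device)       # created on the CPU, then moved
b = torch.rand(3, 3, device=device)   # created directly on the target device
c = a @ b                             # runs on the device that holds a and b

print("result computed on:", c.device)
```

Operands of one operation must live on the same device; mixing a CPU tensor with a GPU tensor raises a RuntimeError.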

How to run PyTorch on GPU by default?

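To make PyTorch use the GPU by default, call “torch.set_default_device('cuda')” (available in PyTorch 2.0 and later) at the beginning of your code so that new tensors are created on the GPU. By contrast, “torch.device('cuda')” only creates a device object that you pass to “.to()”, and “torch.cuda.set_device(device_id)” only selects which GPU counts as the current one.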
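
A minimal sketch of a default-device setup, assuming PyTorch 2.0 or later (earlier versions do not have torch.set_default_device). It falls back to the CPU so it also runs without a GPU.

```python
import torch

# Choose the default once, near the top of the program.
device = "cuda" if torch.cuda.is_available() else "cpu"
torch.set_default_device(device)  # assumes PyTorch >= 2.0

# Factory functions now allocate on the default device without a device= argument.
x = torch.zeros(3)
print("default-allocated tensor lives on:", x.device)
```

This changes global state for the whole process, so it is usually set once at startup rather than toggled back and forth.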

Is a GPU available?

To check if a GPU is available, you can use the following Python code:

    import torch

    if torch.cuda.is_available():
        print("GPU is available.")
    else:
        print("No GPU found; using CPU.")

This code uses PyTorch to check if a GPU is available and prints the corresponding message.

Use GPU in Your PyTorch Code?

To leverage the GPU in PyTorch, make sure PyTorch is installed with GPU support (“torch.cuda.is_available()” should return “True”). Move tensors to the GPU using “.to('cuda')” or “tensor.cuda()”, and move the model the same way so that its parameters and its input data sit on the same device.
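
The sketch below shows the model and data handling together, using a small nn.Linear layer and a random batch as stand-ins for a real model and dataset.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(4, 2).to(device)        # parameters now live on `device`
batch = torch.rand(8, 4, device=device)   # inputs must be on the same device

# Inference only, so gradient tracking is switched off.
with torch.no_grad():
    output = model(batch)

print(tuple(output.shape), output.device)
```

In a training loop, each batch coming out of the data loader needs the same .to(device) call before it is passed to the model.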

Find out the specifications of the GPU(s)?

To obtain GPU specifications, refer to the manufacturer’s website or documentation. Common details include model name, architecture, core clock speed, memory type, and size. For detailed technical information, consult product manuals or use system information tools like GPU-Z.

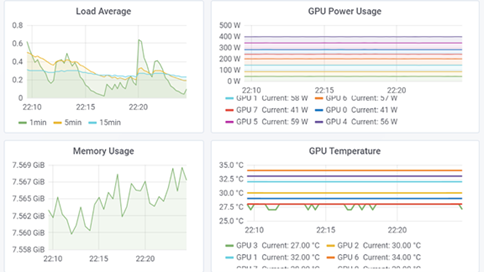

Monitoring Your GPU Metrics:

Monitor GPU metrics using tools like MSI Afterburner, GPU-Z, or system utilities. Track parameters such as temperature, usage, clock speeds, and VRAM. Regular monitoring ensures optimal performance, helps identify potential issues, and allows for adjustments to enhance stability and efficiency in graphics-intensive tasks and applications.
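
For VRAM specifically, a rough view is also available from inside a PyTorch program. The sketch below uses torch.cuda.memory_allocated() and torch.cuda.memory_reserved(); the helper name gpu_memory_mib is illustrative. These counters only cover memory managed by PyTorch in the current process, and they say nothing about temperature or clock speeds.

```python
import torch


def gpu_memory_mib(index: int = 0):
    """Return (allocated, reserved) memory in MiB for one GPU, or None without a GPU."""
    if not torch.cuda.is_available():
        return None
    allocated = torch.cuda.memory_allocated(index) / 1024**2  # held by live tensors
    reserved = torch.cuda.memory_reserved(index) / 1024**2    # held by PyTorch's cache
    return allocated, reserved


usage = gpu_memory_mib()
print(usage if usage is not None else "No GPU found; nothing to report.")
```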

Frequently Asked Questions:

1. How to get available devices and set a specific device in Pytorch-DML?

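In the current torch-directml package (the successor to PyTorch-DML), you can get the number of available devices with torch_directml.device_count(), read a device’s name with torch_directml.device_name(index), and select a specific device with torch_directml.device(index), then pass that device to “.to()”.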

2. Installing PyTorch with ROCm but checking if CUDA is enabled? How can I know if I am running on the AMD GPU?

ROCm builds of PyTorch reuse the torch.cuda interface, so torch.cuda.is_available() returns True when a supported AMD GPU is usable. To confirm that the build targets ROCm rather than CUDA, check torch.version.hip, which is set on ROCm builds and is None otherwise.
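
A small sketch of that distinction follows. The helper name gpu_backend and its return strings are made up for illustration; torch.version.hip is read through getattr in case an older build does not define it.

```python
import torch


def gpu_backend() -> str:
    """Classify the active setup as 'rocm', 'cuda' or 'cpu' (illustrative helper)."""
    if not torch.cuda.is_available():
        return "cpu"
    # ROCm builds expose a HIP version string; CUDA builds leave it as None.
    if getattr(torch.version, "hip", None) is not None:
        return "rocm"
    return "cuda"


backend = gpu_backend()
print("backend:", backend)
```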

3. How to check if your PyTorch / Keras is using the GPU?

To check whether PyTorch or Keras can use the GPU, use torch.cuda.is_available() for PyTorch or tf.config.list_physical_devices('GPU') for TensorFlow/Keras. A True result, or a non-empty list, means a GPU is visible to the framework.

4. Can I use PyTorch on a machine without a GPU?

Yes, PyTorch can be used on machines without a GPU. Tensors and models are placed on the CPU by default, so all computations simply run there.

5. What if I have multiple GPUs? How do I select a specific one?

You can use torch.cuda.set_device(index) to select a specific GPU by its index, or address it directly with a device string such as “cuda:1”.
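
As a sketch of index-based selection with a safe fallback, the hypothetical helper select_gpu below returns a device object for the requested GPU, or the CPU when that index does not exist.

```python
import torch


def select_gpu(index: int) -> torch.device:
    """Return the GPU at `index` if it exists, otherwise the CPU (illustrative helper)."""
    if torch.cuda.is_available() and 0 <= index < torch.cuda.device_count():
        return torch.device(f"cuda:{index}")
    return torch.device("cpu")


device = select_gpu(1)           # second GPU, if the machine has one
x = torch.ones(2, device=device)
print("using:", x.device)
```

Passing an explicit device object around keeps the choice visible in the code, whereas torch.cuda.set_device() changes the current GPU for everything that follows.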

6. How To Check If PyTorch Is Using The GPU?

To check if PyTorch is using the GPU, use the command ‘torch.cuda.is_available()’. If True, PyTorch is configured to use the GPU.

7. Check if CUDA is Available in PyTorch?

To check if CUDA is available in PyTorch, use the command ‘torch.cuda.is_available()’. If the result is True, CUDA, and thus GPU support, is available.

8. How to check your PyTorch / Keras is using the GPU?

To check if PyTorch or Keras is using the GPU, use torch.cuda.is_available() for PyTorch or tf.config.list_physical_devices('GPU') for Keras.

9. Not using GPU although it is specified?

If a specified GPU is not being utilized despite explicit instructions, several factors may contribute to the issue. Common causes include incorrect driver installations, incompatible CUDA versions, conflicting library dependencies, or a model and tensors that were never moved to the GPU with “.to('cuda')”.

FINAL WORDS:

Checking GPU availability in PyTorch is essential for optimizing deep learning tasks. Use torch.cuda.is_available() to determine GPU presence. Ensure CUDA drivers and hardware compatibility for proper detection. You can also list available GPUs, set the default GPU, and use PyTorch on CPU-only machines. Utilizing GPUs boosts deep learning performance, but PyTorch can adapt to CPU environments as well.
